I am a third-year Ph.D. candidate in the Electrical Engineering Department at Yale University. I am a member of the Intelligent Computing Lab, advised by Prof. Priya Panda. My research interests include efficient deep learning models and systems and biology-inspired artificial intelligence. I have focused on neural network quantization for efficient inference, neuromorphic AI with spiking neural networks, and bio-plausible optimization methods. I apply this research to recent topics such as diffusion models and large language models.

Prior to Yale, I graduated from UESTC in 2020. I have worked as a research assistant or intern at the National University of Singapore, SenseTime, and Amazon. I received the 2023 Baidu PhD Fellowship.

News

2024

- Apr: I gave a talk at CoCoSyS.

- Mar: I passed my area exam and advanced to candidacy.

- Feb: A paper about ANN-SNN conversion has been accepted by IJCV.

- Jan: I received the 2023 Baidu PhD Fellowship (10 winners/year).

2023

- Sep: A paper about SNNs with dynamic timesteps was accepted to NeurIPS 2023, and a paper about post-training quantization for LLMs was accepted to EMNLP 2023.

- Aug: I will serve as an Area Chair for EMNLP 2023.

- May: A paper about SNNs with surrogate module learning was accepted to ICML 2023.

Selected Publications

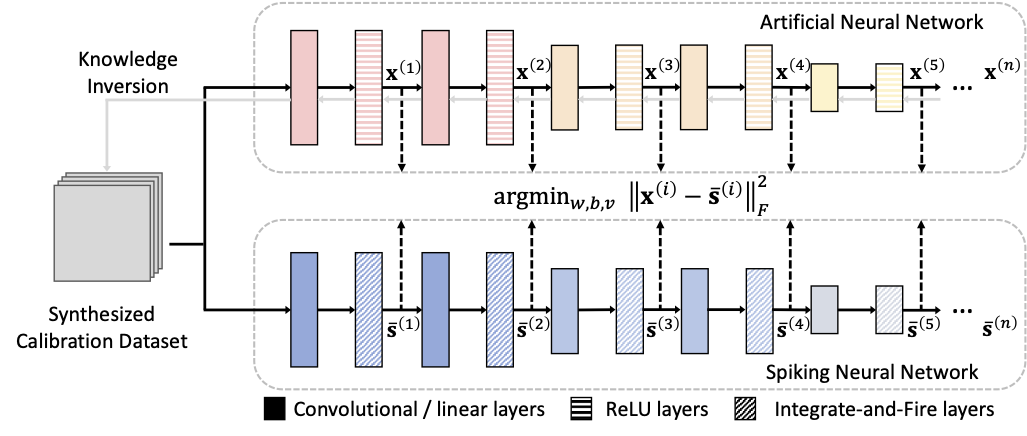

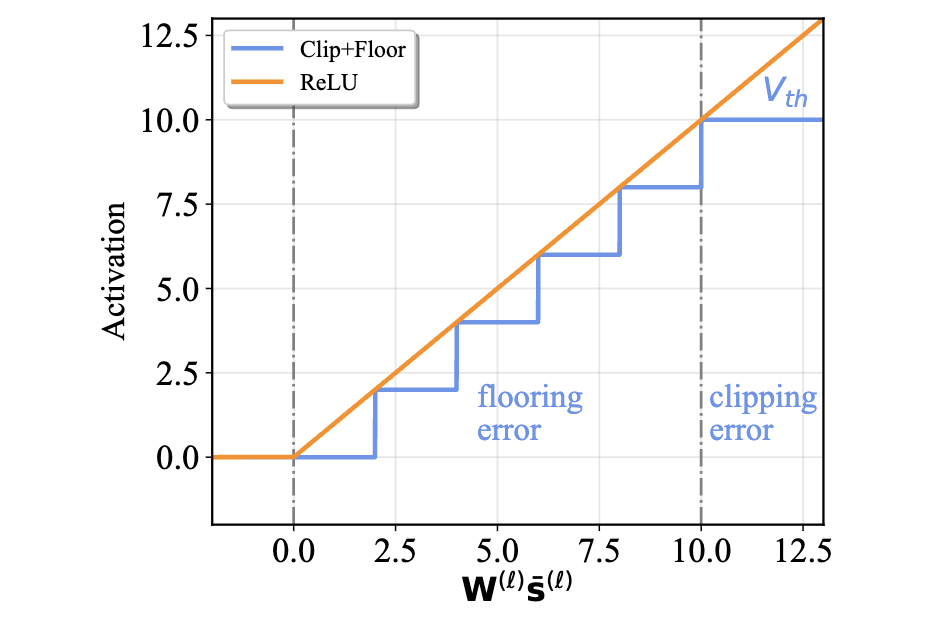

Error-Aware Conversion from ANN to SNN via Post-training Parameter Calibration

Authors: Yuhang Li, Shikuang Deng, Xin Dong, Shi Gu

International Journal of Computer Vision (2024): 1-24.

Paper Link

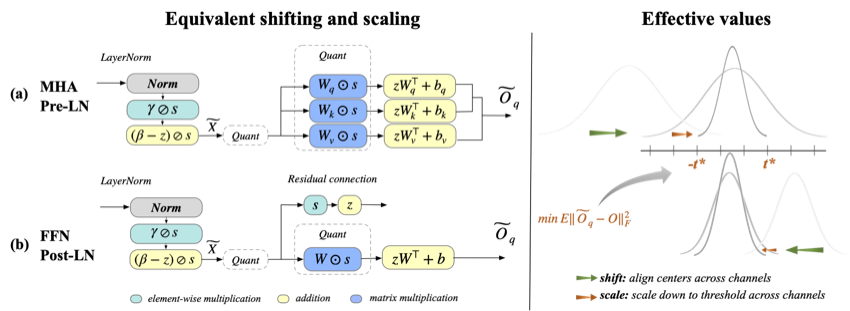

Outlier Suppression+: Accurate Quantization of Large Language Models by Equivalent and Optimal Shifting and Scaling

Authors: Xiuying Wei, Yunchen Zhang, Yuhang Li, Xiangguo Zhang, Ruihao Gong, Jinyang Guo, Xianglong Liu

Conference on Empirical Methods in Natural Language Processing (2023).

Paper Link

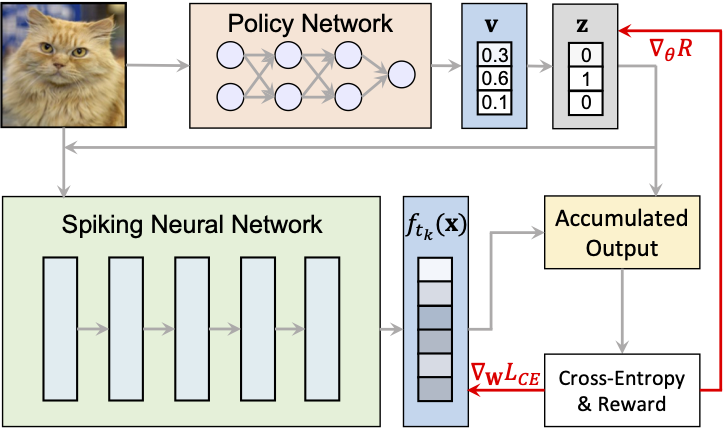

SEENN: Towards Temporal Spiking Early-Exit Neural Networks

Authors: Yuhang Li, Tamar Geller, Youngeun Kim, Priyadarshini Panda

Conference on Neural Information Processing Systems (2023).

Paper Link

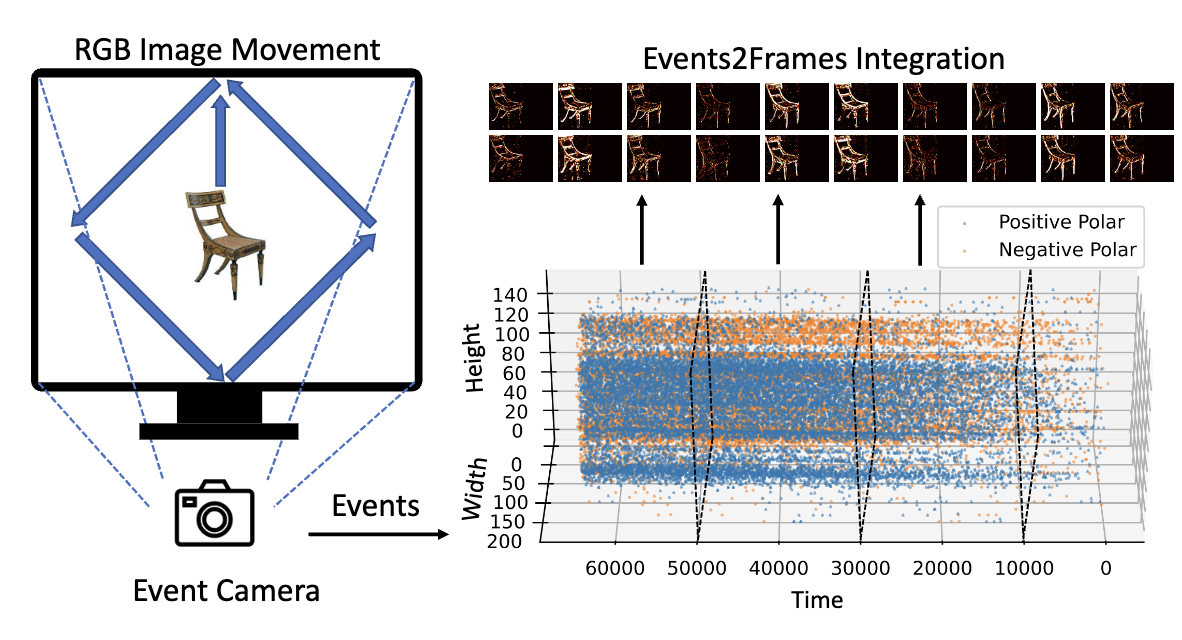

Neuromorphic Data Augmentation for Training Spiking Neural Networks

Authors: Yuhang Li, Youngeun Kim, Hyoungseob Park, Tamar Geller, Priyadarshini Panda

European Conference on Computer Vision (2022).

Paper Link

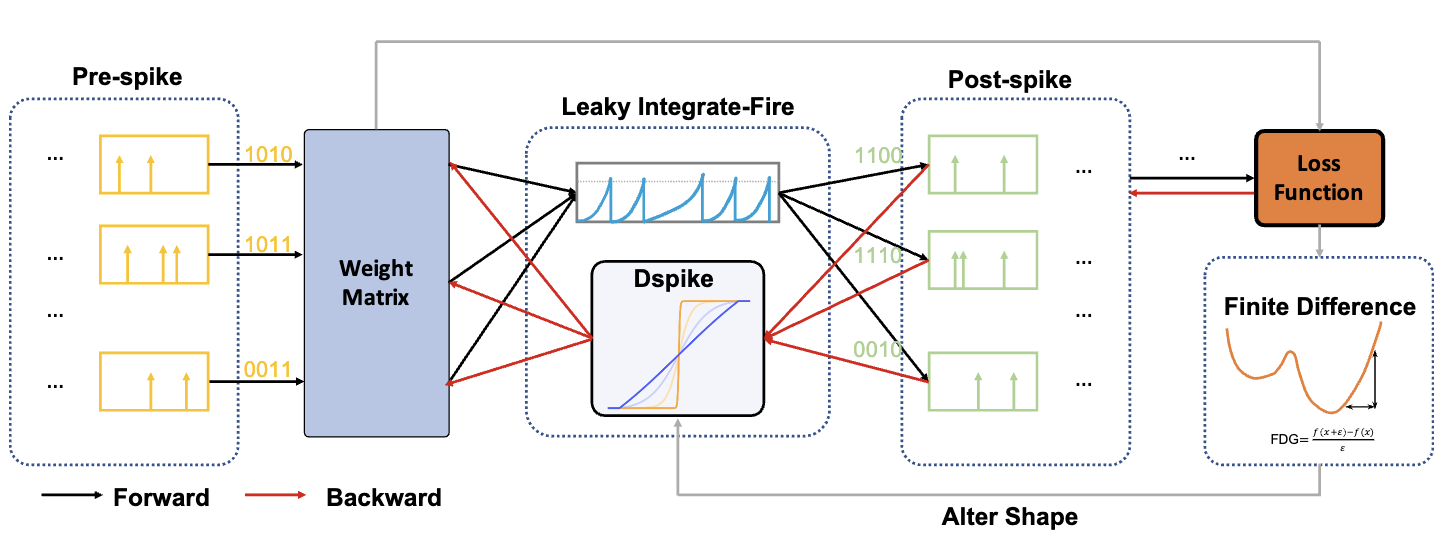

Differentiable spike: Rethinking gradient-descent for training spiking neural networks

Authors: Yuhang Li, Yufei Guo, Shanghang Zhang, Shikuang Deng, Yongqing Hai, Shi Gu

Conference on Neural Information Processing Systems (2021).

Paper Link

A free lunch from ANN: Towards efficient, accurate spiking neural networks calibration

Authors: Yuhang Li, Shikuang Deng, Xin Dong, Ruihao Gong, Shi Gu

International Conference on Machine Learning (2021).

Paper Link

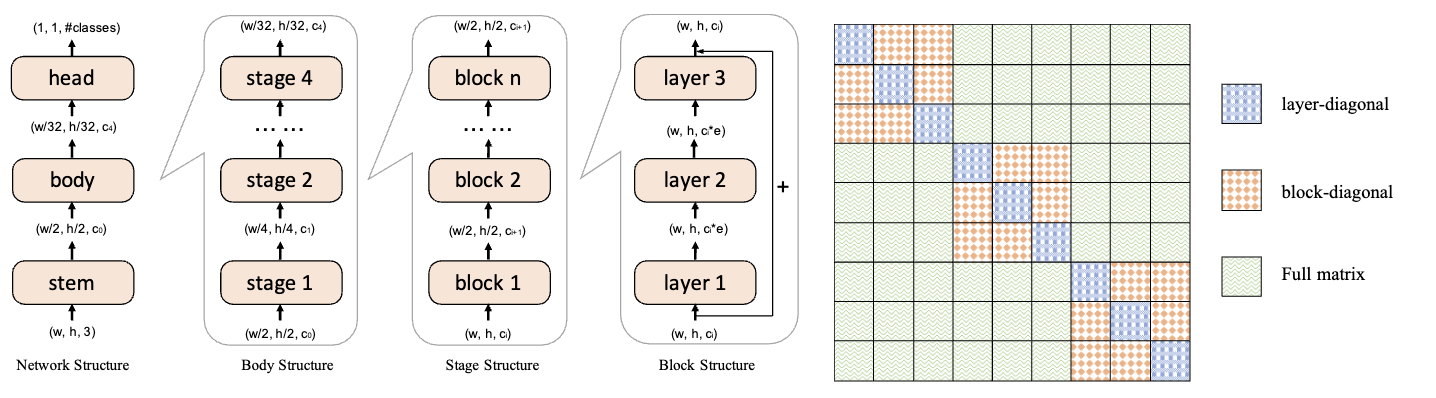

BRECQ: Pushing the limit of post-training quantization by block reconstruction

Authors: Yuhang Li, Ruihao Gong, Xu Tan, Yang Yang, Peng Hu, Qi Zhang, Fengwei Yu, Wei Wang, Shi Gu

International Conference on Learning Representations (2021).

Paper Link

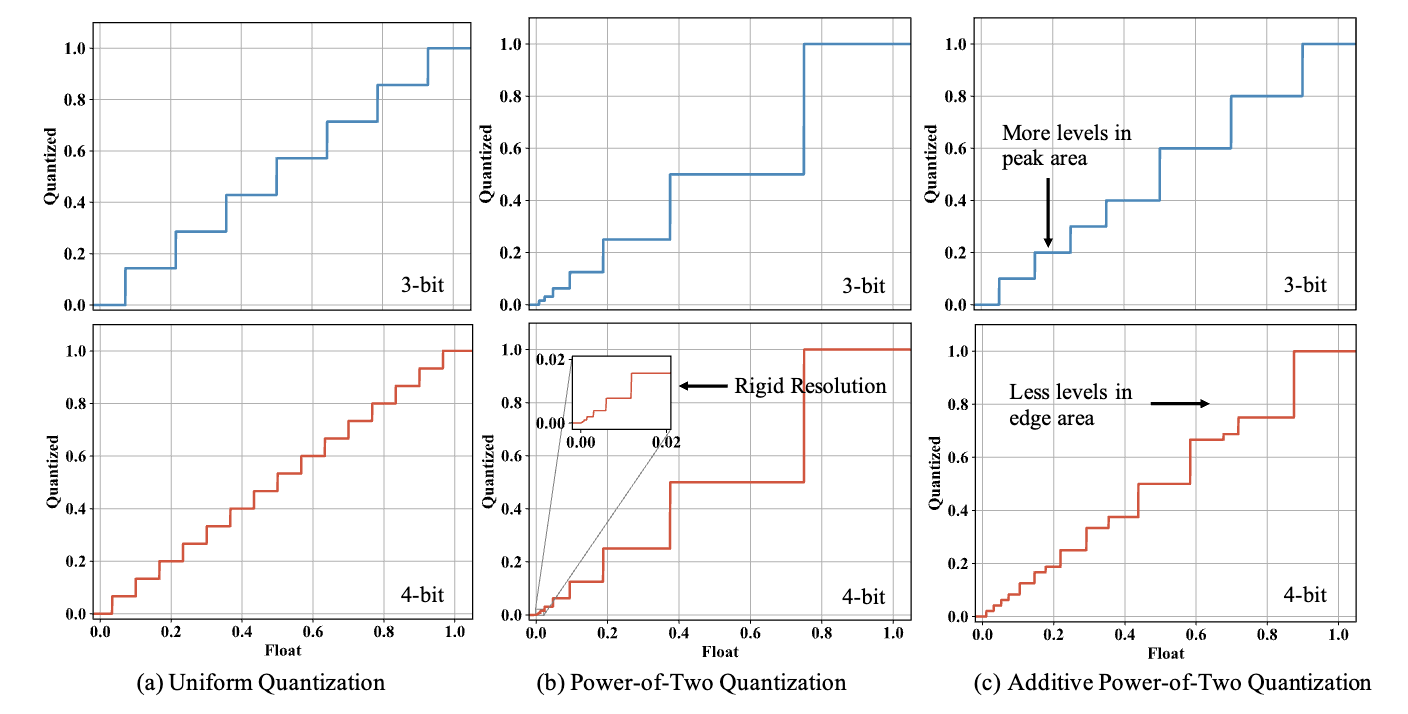

Additive powers-of-two quantization: An efficient non-uniform discretization for neural networks

Authors: Yuhang Li, Xin Dong, Wei Wang

International Conference on Learning Representations (2020).

Paper Link